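The 100 most-cited AI papers of 2022 released: which country is the next AI research power after the US and China?: Google dominates in the number of papers published, but OpenAI accounts for the most notable ones

Zeta Alpha has published the results of a study that includes a list of the 100 most-cited AI papers of 2022 and the number of AI papers published by each country.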


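Zeta Alpha, which supports the development of enterprise AI solutions, published on March 8, 2023 (US time) the results of a study that includes a list of the 100 most-cited AI papers of 2022 and the number of AI papers published by each country.

Zeta Alpha used data from its own platform and careful human curation (the method is described later) to collect the top-cited AI papers of 2022, 2021, and 2020, and analyzed the authors' affiliations and countries. According to the company, this makes it possible to rank by impact on research and development rather than by sheer publication volume.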

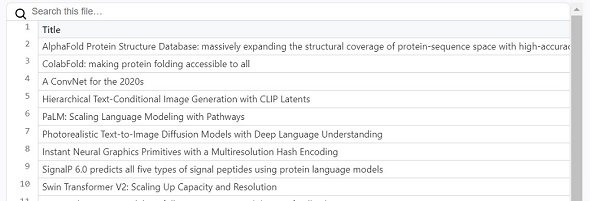

The company's blog first introduced some of the highly cited papers published between 2020 and 2022.

2022

- 1. AlphaFold Protein Structure Database: massively expanding the structural coverage of protein-sequence space with high-accuracy models (authors: DeepMind; citations: 1372)

- 2. ColabFold: making protein folding accessible to all (authors: multiple institutions; citations: 1162)

- 3. Hierarchical Text-Conditional Image Generation with CLIP Latents (authors: OpenAI; citations: 718)

- 4. A ConvNet for the 2020s (authors: Meta, UC Berkeley; citations: 690)

- 5. PaLM: Scaling Language Modeling with Pathways (authors: Google; citations: 452)

2021

- 1. Highly accurate protein structure prediction with AlphaFold (authors: DeepMind; citations: 8965)

- 2. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows (authors: Microsoft; citations: 4810)

- 3. Learning Transferable Visual Models From Natural Language Supervision (authors: OpenAI; citations: 3204)

- 4. On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? (authors: University of Washington, Black in AI, The Aether; citations: 1266)

- 5. Emerging Properties in Self-Supervised Vision Transformers (authors: Meta; citations: 1219)

2020

- 1. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale (authors: Google; citations: 11914)

- 2. Language Models are Few-Shot Learners (authors: OpenAI; citations: 8070)

- 3. YOLOv4: Optimal Speed and Accuracy of Object Detection (authors: Academia Sinica, Taiwan; citations: 8014)

- 4. Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer (authors: Google; citations: 5906)

- 5. Bootstrap your own latent: A new approach to self-supervised Learning (authors: DeepMind, Imperial College London; citations: 2873)

Trends in the most-cited papers of the past three years

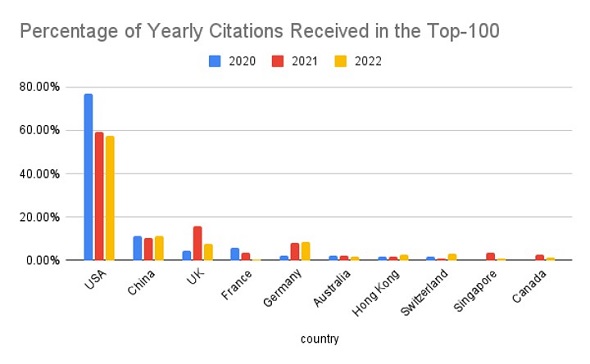

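Looking at where these top-cited papers come from (Figure 1), the United States has remained dominant for three consecutive years, and the gaps among the leading countries shift only slightly from year to year.

To assess US dominance properly, the study shows the cumulative number of citations per country rather than the mere number of papers. So that years can be compared meaningfully, the figures are normalized by the total number of citations for each year.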
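The normalization described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `normalized_citation_share`, the record layout, and the toy numbers are hypothetical and are not taken from Zeta Alpha's data or code.

```python
from collections import defaultdict


def normalized_citation_share(records):
    """Return each country's share of a year's total citations.

    `records` is an iterable of (year, country, citations) tuples.
    The layout is a hypothetical one chosen for this sketch.
    """
    year_totals = defaultdict(int)
    country_totals = defaultdict(int)
    for year, country, citations in records:
        year_totals[year] += citations
        country_totals[(year, country)] += citations

    shares = {}
    for (year, country), citations in country_totals.items():
        if year_totals[year] == 0:
            continue  # no citations recorded for this year, nothing to normalize
        shares[(year, country)] = citations / year_totals[year]
    return shares


# Toy records with placeholder countries "A", "B", "C" (not real figures).
toy_records = [
    (2021, "A", 600), (2021, "B", 300), (2021, "C", 100),
    (2022, "A", 120), (2022, "B", 60), (2022, "C", 20),
]
shares = normalized_citation_share(toy_records)
for key in sorted(shares):
    print(key, round(shares[key], 3))
```

In the toy data, country "A" has far fewer raw citations in 2022 than in 2021 because newer papers have had less time to accumulate them, yet its normalized share is the same in both years. That is the reason for dividing by the yearly total.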

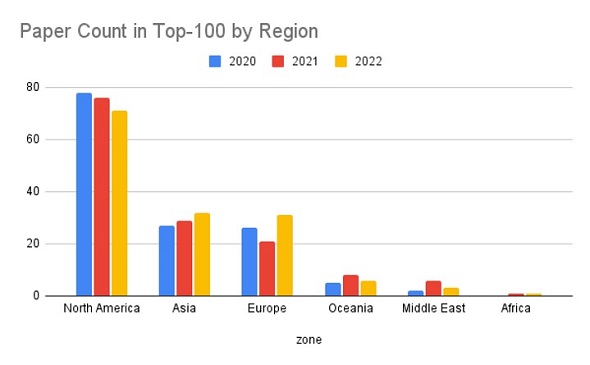

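Outside the US and China, the UK is clearly the strongest player. However, DeepMind accounts for an even larger share of the UK's contribution in 2022 (69% of the UK total) than in previous years (60%). By region, North America leads by a wide margin, and Asia is slightly ahead of Europe.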

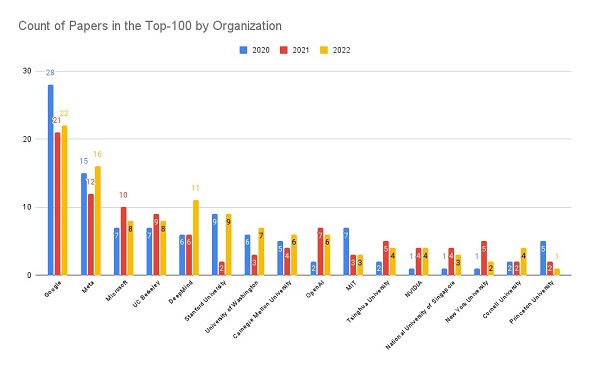

The next comparison is the number of papers from major organizations in the top 100.

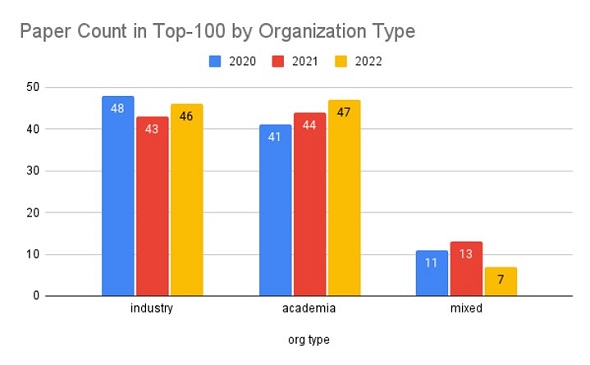

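Google is consistently the strongest player, followed by Meta, Microsoft, UC Berkeley, DeepMind, and Stanford University. Industry has taken the lead in recent AI research, and individual academic institutions do not produce as much impact on their own. Because there are many of them, however, the totals even out when aggregated by organization type.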

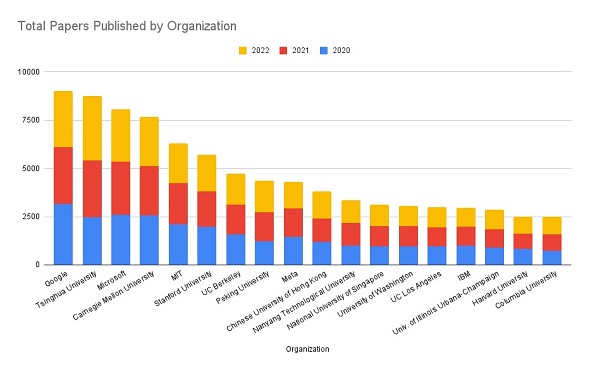

How many papers were published over these three years?

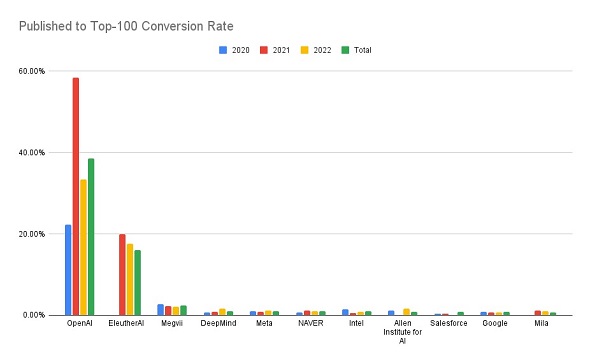

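In total paper count, Google still leads, but its margin over the others is much smaller than in the top-100 citation ranking. OpenAI and DeepMind do not appear among the top 20 by publication volume. These institutions publish little but have high impact. Figure 7 shows the proportion of each organization's published papers that make it into the top 100 by citations.

OpenAI stands out when it comes to turning the papers it publishes into absolute blockbusters. OpenAI's marketing magic has certainly helped boost its popularity, but it is undeniable that some of its recent research is of outstanding quality. EleutherAI, a non-profit focused on the interpretability and alignment of large language models, publishes few papers but boasts an impressive rate of inclusion in the top 100 most-cited papers.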
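The rate discussed above, meaning the share of an organization's published papers that reach the top 100, is a simple ratio. The sketch below assumes two hypothetical dictionaries keyed by organization name. The organization names and counts are placeholders, not figures from the study.

```python
def top100_conversion_rate(total_papers, top100_papers):
    """Return, per organization, the fraction of its papers that reached the top 100.

    Both arguments map an organization name to a paper count.
    Organizations with no published papers are skipped to avoid dividing by zero.
    """
    rates = {}
    for org, total in total_papers.items():
        if total <= 0:
            continue
        rates[org] = top100_papers.get(org, 0) / total
    return rates


# Placeholder counts: "org_large" publishes a lot, "org_small" publishes little.
total_papers = {"org_large": 1000, "org_small": 50}
top100_papers = {"org_large": 20, "org_small": 5}

rates = top100_conversion_rate(total_papers, top100_papers)
for org, rate in sorted(rates.items(), key=lambda item: item[1], reverse=True):
    print(f"{org}: {rate:.1%}")
```

With these placeholder counts, the low-volume organization has fewer top-100 papers in absolute terms but a higher conversion rate, which is the pattern the article describes for low-volume, high-impact labs.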

The 100 most-cited papers of 2022

Finally, the blog presents the top-100 list itself, along with each paper's title, citation count, and affiliations.

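How the top 100 most-cited papers were compiled

Zeta Alpha first collected the most-cited papers for each year on its platform and manually checked each paper's first publication date (usually that of an arXiv preprint) to place it in the correct year. It supplemented this list by searching Semantic Scholar for highly cited AI papers, which mainly added papers from high-impact closed-source publishers (Nature, Elsevier, Springer, and other journals). It then took the Google Scholar citation count as the representative metric for each paper and sorted the papers by that number to obtain the top 100 for each year. For these papers, GPT-3 was used to extract the authors, affiliations, and countries, and Zeta Alpha checked the results by hand (when the country was not clear from the publication, the country of the organization's headquarters was used). A paper whose authors have multiple affiliations was counted once for each affiliation.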
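The last two steps of this procedure, sorting by citation count and counting a paper once for each of its affiliations, can be sketched as follows. The field names (`title`, `citations`, `affiliations`) and the function names are hypothetical. The three sample entries are only an excerpt of the 2022 list quoted earlier in the article, so the result is not the study's actual ranking.

```python
from collections import Counter


def top_n_by_citations(papers, n=100):
    """Sort papers by citation count, highest first, and keep the first n."""
    return sorted(papers, key=lambda paper: paper["citations"], reverse=True)[:n]


def count_affiliations(papers):
    """Count each paper once for every distinct affiliation it lists."""
    counts = Counter()
    for paper in papers:
        # set() ensures a paper with several authors from one organization
        # contributes only one count to that organization.
        for org in set(paper["affiliations"]):
            counts[org] += 1
    return counts


# Excerpt of the 2022 entries quoted in the article (not the full top 100).
sample = [
    {"title": "PaLM: Scaling Language Modeling with Pathways",
     "citations": 452, "affiliations": ["Google"]},
    {"title": "A ConvNet for the 2020s",
     "citations": 690, "affiliations": ["Meta", "UC Berkeley"]},
    {"title": "Hierarchical Text-Conditional Image Generation with CLIP Latents",
     "citations": 718, "affiliations": ["OpenAI"]},
]

top_two = top_n_by_citations(sample, n=2)
affiliation_counts = count_affiliations(top_two)
print([paper["title"] for paper in top_two])
print(affiliation_counts)
```

Because a multi-affiliation paper adds one count to each organization, the per-organization counts can sum to more than the number of papers in the list.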


Copyright © ITmedia, Inc. All Rights Reserved.